High Efficiency Video Coding (HEVC), also known as H.265, was released in January 2013 as a significant advancement in video compression. The HEVC codec delivers high-quality video with reduced file sizes and lower delivery costs, making it well suited to high-resolution 4K and 8K video. Developed as an open standard by ITU-T’s VCEG and ISO/IEC MPEG, HEVC achieves up to 50% better compression than AVC and up to 75% better compression than MPEG-2, enabling cost-efficient, high-quality video streaming and transmission.

This technology has become the preferred standard for video coding for various industries due to its ability to efficiently manage video data. HEVC allows for substantial memory savings and reduced delivery costs while maintaining video quality. Its compression capabilities also lower the bitrates necessary for video transfer or streaming, enabling the smooth handling of high-resolution formats such as 4K and 8K.

Fundamentals of video compression technology

People now seek higher video quality, including better resolution, smoother playback, and more vivid details, while also wanting greater access to content. Video creation has expanded beyond professional studios to include personal creators, video calls, home security, and wearable cameras. This surge puts a heavy strain on global communication networks and storage, making technologies like HEVC (High Efficiency Video Coding) crucial for reducing this load.

AVC (Advanced Video Coding) currently leads global video compression but is expected to be surpassed by the newer HEVC, which can halve the data size of AVC without losing quality. This shift mirrors how AVC replaced MPEG-2, which, despite pioneering digital TV, was gradually overtaken due to AVC’s higher efficiency. HEVC supports modern needs for higher resolutions and improved quality, making it a key development for better video streaming and storage.

Video compression works by eliminating redundant data from a raw video stream, allowing the encoded video stream to be stored or transmitted more efficiently. The encoding process involves algorithms that strip away unnecessary information, with the time taken for this process, especially in live broadcasts, being referred to as encoding latency. Decoding is the reverse process, where the encoded video is reconstructed to closely match the original raw content.

The core of a codec lies in its ability to compress and decompress data. Each video compression standard, such as MPEG-2, AVC, and HEVC, offers different outcomes in terms of bitrates and quality.

The algorithms for decoding are precisely defined within each codec standard, and standards generally emphasize decoder specifications. For compatibility and proper decoding of compliant video streams, it is necessary for the decoder path to align with the standard guidelines. Encoders, which provide the necessary data for decoders to follow, can differ across vendors and even among different products by the same vendor. These variations arise due to different tool implementations within the standard, influenced by market requirements, platform limitations, or design trade-offs made during development.

Encoders are complex, functioning as “black boxes” that make informed decisions within a wide operational range. As such, encoders for a single codec, such as HEVC, are not identical, even though they adhere to the same underlying principles. The encoding process typically involves:

- Processing each frame by dividing it into pixel blocks for parallel handling.

- Identifying and utilizing spatial redundancies within a frame using spatial prediction and other coding methods.

- Analyzing temporal frames to identify changes between consecutive frames, using motion estimation and compensation to generate motion vectors that describe these changes.

- Encoding only the differences between original and predicted blocks through quantization, transformation, and entropy coding.
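The steps above can be sketched with a toy example. This is a minimal illustration under stated assumptions, not how a real HEVC encoder is built: frames are small lists of 8-bit luma values, each block is predicted from the co-located block of the previous frame (zero motion), and the transform and entropy coding stages are left out entirely. All function names are hypothetical.

```python
from typing import Dict, List, Tuple

Frame = List[List[int]]   # rows of luma samples
Block = List[List[int]]


def split_into_blocks(frame: Frame, size: int) -> Dict[Tuple[int, int], Block]:
    """Map (block_row, block_col) to a size x size block of samples.

    Assumes the frame dimensions are multiples of `size`.
    """
    blocks = {}
    for by in range(0, len(frame), size):
        for bx in range(0, len(frame[0]), size):
            blocks[(by // size, bx // size)] = [
                row[bx:bx + size] for row in frame[by:by + size]
            ]
    return blocks


def encode_frame(cur: Frame, ref: Frame, size: int, step: int):
    """Return quantized residual levels for every block of `cur`."""
    ref_blocks = split_into_blocks(ref, size)
    levels = {}
    for pos, block in split_into_blocks(cur, size).items():
        pred = ref_blocks[pos]  # prediction: co-located block in the reference
        levels[pos] = [
            [round((o - p) / step) for o, p in zip(row_o, row_p)]
            for row_o, row_p in zip(block, pred)
        ]
    return levels


def decode_frame(levels, ref: Frame, size: int, step: int) -> Frame:
    """Rebuild the frame from the same prediction plus dequantized residuals."""
    ref_blocks = split_into_blocks(ref, size)
    out = [[0] * len(ref[0]) for _ in ref]
    for (br, bc), lv in levels.items():
        pred = ref_blocks[(br, bc)]
        for y in range(size):
            for x in range(size):
                out[br * size + y][bc * size + x] = pred[y][x] + lv[y][x] * step
    return out


previous = [[10] * 4 for _ in range(4)]
current = [
    [10, 10, 10, 10],
    [10, 10, 10, 10],
    [10, 10, 50, 53],
    [10, 10, 49, 51],
]
levels = encode_frame(current, previous, size=2, step=4)
decoded = decode_frame(levels, previous, size=2, step=4)
```

Only the bottom-right block produces non-zero levels; the three unchanged blocks cost almost nothing to signal. The decoded frame is close to the original but not identical, because quantization is the lossy step.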

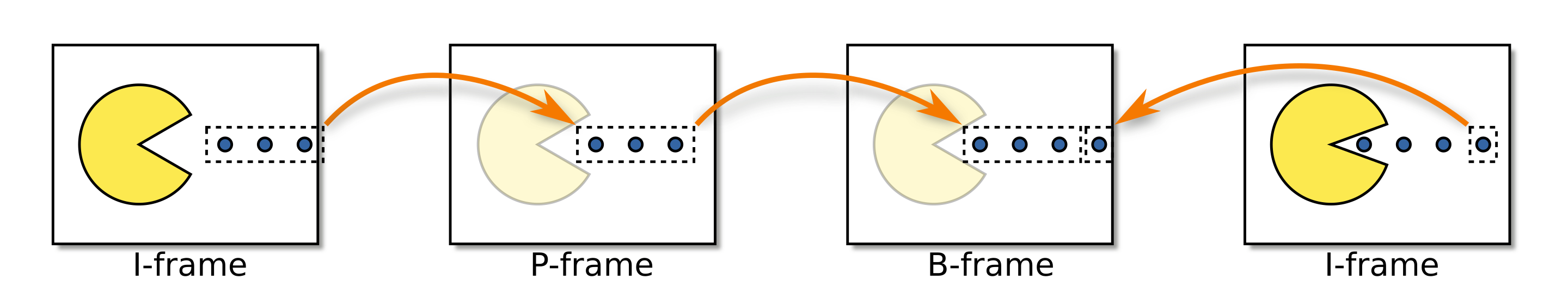

Video sequence frame types

Video frames are categorized into I-frames, P-frames, and B-frames. The encoder optimizes bitrates by using these frame types strategically to eliminate temporal and spatial redundancies. By predicting and replacing repeated data blocks with encoded versions of original data, the encoder reduces file sizes and transmission bitrates.

In high-motion videos, like sports footage, moving elements are prioritized over static ones. Motion compensation algorithms predict object movements and generate motion vectors, allowing the encoder to use fewer bits by referencing previously encoded data when matching occurs. When a block’s position shifts, motion vectors replace the full block data, conserving bandwidth.

- I-frame, or Intra-coded picture, is a fully encoded image that can be decoded independently without any reference to other frames. It is similar to standalone image formats like JPG or BMP, as it represents a complete visual frame.

- P-frame, or Predicted picture, is different because it only contains data about the changes from the last frame. For instance, in a video where a car moves across a stationary background, the P-frame only needs to encode the car’s movement while leaving the unchanged background out. This saves storage and bandwidth since the redundant background information is not repeated in each frame. Due to this, P-frames are also referred to as delta-frames, as they encode the “delta,” or difference, from a previous frame.

- B-frame, or Bidirectional predicted picture, takes compression a step further by referencing both the previous and the next frames. It identifies the differences between these frames to predict the current content. This approach allows B-frames to save even more space because they can make use of data from multiple reference points to minimize redundancy.
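To make the motion-vector idea concrete, here is a small sketch of exhaustive block matching using the sum of absolute differences (SAD), followed by a simple two-reference average of the kind a B-frame can use. It is an illustration under simplifying assumptions (integer-pixel search, tiny frames, no residual coding); production encoders use much faster search strategies and sub-sample interpolation. The helper names are hypothetical.

```python
def sad(cur, ref, bx, by, dx, dy, size):
    """Sum of absolute differences between a block in `cur` and a shifted block in `ref`."""
    return sum(
        abs(cur[by + y][bx + x] - ref[by + dy + y][bx + dx + x])
        for y in range(size)
        for x in range(size)
    )


def motion_search(cur, ref, bx, by, size, search_range):
    """Try every displacement in the window and return (cost, dx, dy) of the best match."""
    height, width = len(ref), len(ref[0])
    best = None
    for dy in range(-search_range, search_range + 1):
        for dx in range(-search_range, search_range + 1):
            # Skip candidates that would read outside the reference frame.
            if not (0 <= by + dy and by + dy + size <= height):
                continue
            if not (0 <= bx + dx and bx + dx + size <= width):
                continue
            cost = sad(cur, ref, bx, by, dx, dy, size)
            if best is None or cost < best[0]:
                best = (cost, dx, dy)
    return best


def bi_predict(block_a, block_b):
    """B-frame style prediction: rounded average of two reference blocks."""
    return [
        [(a + b + 1) >> 1 for a, b in zip(row_a, row_b)]
        for row_a, row_b in zip(block_a, block_b)
    ]


def make_frame(width, height, obj_x, obj_y):
    """Black frame with a 2x2 bright object whose top-left corner is (obj_x, obj_y)."""
    frame = [[0] * width for _ in range(height)]
    obj = [[200, 210], [220, 230]]
    for y in range(2):
        for x in range(2):
            frame[obj_y + y][obj_x + x] = obj[y][x]
    return frame


reference = make_frame(6, 6, obj_x=1, obj_y=1)
current = make_frame(6, 6, obj_x=3, obj_y=2)
cost, dx, dy = motion_search(current, reference, bx=3, by=2, size=2, search_range=2)
```

Here the object moved two samples right and one sample down, so the best match for the current block lies at displacement `(-2, -1)` in the reference with a cost of zero. A P-frame would then store only that vector for the block instead of its four sample values.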

Introduction to High Efficiency Video Coding (HEVC)

In recent years, 4K, ultra high-definition (UHD), and higher-resolution video content have experienced substantial growth. 4K/UHD (either 4096×2160 or 3840×2160) has about four times the pixel count of full-HD (FHD, 1920×1080), delivering significantly enhanced clarity and detailed visuals. These high-resolution video formats are known to improve the quality of experience (QoE) for viewers and have become common in the consumer market.
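The pixel counts behind these figures are easy to check. The short sketch below also estimates an uncompressed data rate, assuming 8-bit 4:2:0 sampling (12 bits per pixel on average) and 60 frames per second; both values are illustrative assumptions rather than figures from this article.

```python
RESOLUTIONS = {
    "FHD": (1920, 1080),
    "UHD": (3840, 2160),
    "DCI 4K": (4096, 2160),
}


def pixel_count(name: str) -> int:
    """Number of luma samples per frame for a named resolution."""
    width, height = RESOLUTIONS[name]
    return width * height


def raw_bitrate_mbps(name: str, fps: float, bits_per_pixel: int = 12) -> float:
    """Uncompressed data rate in megabits per second.

    bits_per_pixel=12 corresponds to 8-bit 4:2:0 video (assumed here).
    """
    return pixel_count(name) * fps * bits_per_pixel / 1e6


uhd_vs_fhd = pixel_count("UHD") / pixel_count("FHD")        # 4.0
dci_vs_fhd = pixel_count("DCI 4K") / pixel_count("FHD")     # about 4.27
uhd_raw = raw_bitrate_mbps("UHD", fps=60)                   # about 5972 Mbps
```

Under these assumptions, raw UHD video at 60 fps needs close to 6 Gbps, which is why efficient compression is a precondition for distributing it at all.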

Despite the potential QoE improvements, 4K/UHD video presents significant challenges in distribution due to the higher data rates involved. Effective video compression technologies are essential to manage these data rates and ensure the content can be efficiently transmitted within existing distribution infrastructures. The most widely used H.264 Advanced Video Coding (AVC) standard struggles to meet these requirements for high-resolution video. In response, modern video codecs like H.265 High Efficiency Video Coding (HEVC), AOMedia Video 1 (AV1), and Audio Video Coding Standard (AVS2) have been developed, specifically optimized for compressing 4K and higher-resolution content.

HEVC was introduced to advance video compression by:

- Reducing bitrate by about 50% compared to H.264/AVC at the same video quality, which amounts to roughly double the compression efficiency.

- Improving video quality at a given bitrate.

- Supporting high-performance outputs, including Ultra-HD 8K resolution at 120 frames per second, with optimized power usage.

- Incorporating features designed for easy implementation, such as built-in parallel processing capabilities.

- Reducing the strain on global network infrastructures.

- Enabling smoother streaming of HD video content to mobile devices.

- Addressing the demands of evolving screen resolutions, such as Ultra-HD.

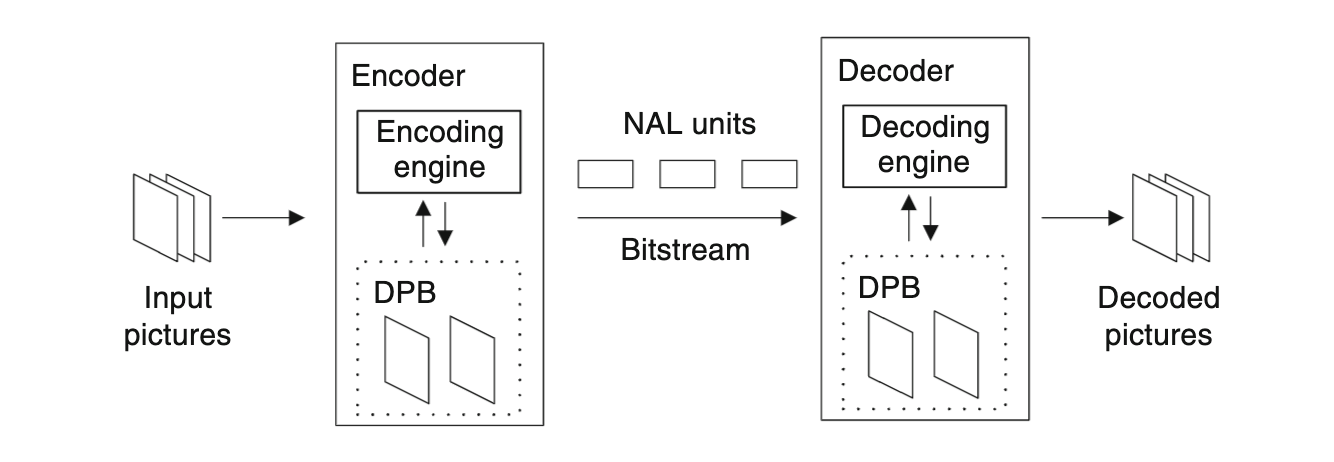

HEVC (H.265 Codec) encoder and decoder

The figure illustrates how the HEVC encoder and decoder work. The encoder processes input pictures and converts them into a bitstream made up of network abstraction layer (NAL) units. Each NAL unit contains an integer number of bytes: the first two bytes form the header and the rest hold the payload. Some NAL units carry control information that applies to one or more whole pictures, while others contain coded picture data.
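As an illustration of the two-byte NAL unit header, the sketch below unpacks its four fields. The field layout (1-bit `forbidden_zero_bit`, 6-bit `nal_unit_type`, 6-bit `nuh_layer_id`, 3-bit `nuh_temporal_id_plus1`) follows the H.265 specification; the function and class names are hypothetical, and only a handful of type values are listed.

```python
from typing import NamedTuple


class NalHeader(NamedTuple):
    forbidden_zero_bit: int
    nal_unit_type: int
    nuh_layer_id: int
    nuh_temporal_id_plus1: int


# A few well-known nal_unit_type values (not an exhaustive list).
NAL_TYPE_NAMES = {
    19: "IDR_W_RADL",  # coded slice of an IDR picture
    32: "VPS",         # video parameter set
    33: "SPS",         # sequence parameter set
    34: "PPS",         # picture parameter set
}


def parse_nal_header(data: bytes) -> NalHeader:
    """Decode the first two bytes of an HEVC NAL unit (start code already removed)."""
    if len(data) < 2:
        raise ValueError("an HEVC NAL unit header is two bytes long")
    value = int.from_bytes(data[:2], "big")
    return NalHeader(
        forbidden_zero_bit=(value >> 15) & 0x01,
        nal_unit_type=(value >> 9) & 0x3F,
        nuh_layer_id=(value >> 3) & 0x3F,
        nuh_temporal_id_plus1=value & 0x07,
    )


header = parse_nal_header(bytes([0x40, 0x01]))
kind = NAL_TYPE_NAMES.get(header.nal_unit_type, "other")
```

The bytes `0x40 0x01` decode to type 32, a video parameter set, which is an example of a NAL unit that carries control information rather than picture data.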

The decoder processes these NAL units to reconstruct the output pictures. Both the encoder and decoder use a decoded picture buffer (DPB) to store images for future reference, which helps in generating prediction signals for encoding other pictures. These stored images are known as reference pictures.

HEVC divides each picture into one or more slices. Slices are coded independently, meaning the data within a slice does not depend on other slices in the picture. A slice can be made of multiple segments, with the first segment being independent and called an independent slice segment. Additional segments are called dependent slice segments as they rely on the data from previous segments.

Each segment has a header containing control information and the segment data itself, which holds the encoded content. The header of the independent slice segment, known as the slice header, applies to all segments of that slice.

Entropy coding in HEVC

Entropy coding is a lossless data compression method that leverages the statistical properties of data to minimize the number of bits required for representation. It ensures that more frequently occurring data is encoded using fewer bits, while less common data takes more bits. This method is applied at the last stage of video encoding and the first stage of decoding: after the video content has been broken down into syntax elements, which include prediction details and residual signals, entropy coding compresses these elements efficiently.
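The principle that frequent symbols get shorter codes can be shown with a Huffman code. Note that this is a stand-in chosen for brevity: HEVC itself uses CABAC rather than Huffman coding, and the symbol sequence below is made up for illustration.

```python
import heapq
from collections import Counter


def huffman_code_lengths(symbols):
    """Return {symbol: code length in bits} for a Huffman code built from `symbols`."""
    counts = Counter(symbols)
    if len(counts) == 1:
        return {next(iter(counts)): 1}
    # Heap entries: (weight, unique tie-breaker, symbols in this subtree).
    heap = [(n, i, [s]) for i, (s, n) in enumerate(counts.items())]
    heapq.heapify(heap)
    lengths = dict.fromkeys(counts, 0)
    tie = len(heap)
    while len(heap) > 1:
        n1, _, group1 = heapq.heappop(heap)
        n2, _, group2 = heapq.heappop(heap)
        # Merging two subtrees pushes every symbol in them one level deeper.
        for s in group1 + group2:
            lengths[s] += 1
        heapq.heappush(heap, (n1 + n2, tie, group1 + group2))
        tie += 1
    return lengths


def total_bits(symbols, lengths):
    """Total size of the message when each symbol uses its assigned code length."""
    return sum(lengths[s] for s in symbols)


message = "a" * 8 + "b" * 4 + "c" * 2 + "d"
lengths = huffman_code_lengths(message)
coded_size = total_bits(message, lengths)
fixed_size = 2 * len(message)  # 2 bits per symbol for a 4-symbol alphabet
```

The most common symbol ends up with a 1-bit code and the rarest with 3 bits, so the 15-symbol message shrinks from 30 bits to 25 bits without losing any information.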

CABAC (Context-Based Adaptive Binary Arithmetic Coding)

CABAC, first introduced in the H.264/AVC standard, is a form of entropy coding that is also used in HEVC (High Efficiency Video Coding). It is known for its high compression efficiency due to its advanced use of binary arithmetic coding. CABAC works by transforming syntax elements into binary symbols (called bins) through a process known as binarization. It then applies context modeling to estimate the probability of these bins, allowing the arithmetic coding to compress them based on their probabilities.
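The binarization and context-modeling stages can be sketched as follows. This is a heavily simplified model, not the CABAC algorithm from the standard: it uses only unary binarization, a single context with an exponentially updated probability instead of the standard's state machine, and it reports the ideal arithmetic-coding cost of each bin (`-log2(p)`) rather than producing a bitstream. All names and the adaptation rate are assumptions.

```python
import math


def unary_binarize(value: int):
    """Unary binarization: N is mapped to N ones followed by a terminating zero."""
    return [1] * value + [0]


class AdaptiveContext:
    """Tracks an estimate of P(bin == 1) and adapts it after every coded bin."""

    def __init__(self, p_one: float = 0.5, rate: float = 0.05):
        self.p_one = p_one
        self.rate = rate

    def cost_and_update(self, bin_value: int) -> float:
        # Ideal cost in bits of coding this bin under the current estimate.
        p = self.p_one if bin_value else 1.0 - self.p_one
        bits = -math.log2(p)
        # Move the estimate toward the value that was just observed.
        self.p_one += self.rate * (bin_value - self.p_one)
        return bits


def estimate_bits(values) -> float:
    """Estimated size of a sequence of non-negative integers, using one shared context."""
    ctx = AdaptiveContext()
    return sum(ctx.cost_and_update(b) for v in values for b in unary_binarize(v))


mostly_zero = estimate_bits([0] * 100)
```

Because the context learns that zeros dominate, the hundred bins cost far less than one bit each on average. The sequential update of the probability estimate is also the data dependency that makes this kind of coder hard to parallelize.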

While CABAC provides excellent compression efficiency, it poses challenges for parallel processing due to its data dependencies, which can limit processing speed. In HEVC, improvements were made to CABAC to address these throughput challenges while maintaining high coding efficiency.

CAVLC (Context-Adaptive Variable-Length Coding)

CAVLC is another entropy coding method included in the H.264/AVC standard. It is a simpler alternative to CABAC and works by using variable-length codes that switch adaptively based on context. This method is less complex to implement, making it more efficient in terms of processing power. However, it is less efficient in compression compared to CABAC. The bit-rate overhead for CAVLC relative to CABAC is typically between 10–22%, depending on the video resolution and profile used, such as standard definition (SD) or high definition (HD) 1080p.

| Feature | CABAC (Context-Based Adaptive Binary Arithmetic Coding) | CAVLC (Context-Adaptive Variable-Length Coding) |
| --- | --- | --- |
| Compression Efficiency | Higher compression efficiency, leading to lower bit rates. | Lower compression efficiency; bit-rate overhead typically 10–22% more than CABAC. |
| Complexity | More complex to implement; involves context modeling and arithmetic coding. | Simpler and less resource-intensive; uses variable-length coding based on context. |
| Processing Speed | Slower, due to data dependencies that hinder parallel processing. | Faster processing and more parallelizable due to simpler structure. |
| Use in Standards | Used in both H.264/AVC and HEVC; the sole entropy coding method in HEVC. | Used in H.264/AVC as an alternative to CABAC. Not used in HEVC. |
| Application Suitability | Suitable for scenarios where compression efficiency is a priority, even at the cost of complexity. | Suitable for scenarios needing faster, simpler encoding at the cost of higher bit rates. |
| Bit-Rate Overhead | Minimal; provides optimal bit-rate savings. | 10–22% higher bit rate compared to CABAC for the same quality. |
| Adoption | More commonly used in professional and high-quality video applications. | More suitable for applications requiring lower complexity. |

Parallel processing in HEVC (H.265 Codec)

Parallel processing is essential for enabling real-time video codec operations on systems that might otherwise be unable to support such processes. Modern hardware inherently supports multi-threading, even on low-power platforms. This shift occurred as increasing CPU clock speeds became more challenging and costly, leading to the development of multi-core processors by general-purpose and ARM-based processor manufacturers. The benefits of multi-threading can only be harnessed effectively if the system allows threads to run in parallel, utilizing multiple cores or processing units.

Multi-threading in software involves breaking down computations into independent threads that share certain resources, such as memory. These threads can execute simultaneously or in a time-shared manner, contingent on hardware and software design. Communication among threads is managed via shared memory, necessitating synchronization mechanisms like locks, semaphores, barriers, and condition variables to maintain resource order and prevent conflicts.

The performance gain from multi-threading is measured as the speed-up ratio between the execution time of code on a single processing unit and its execution on ‘p’ parallel processing units. Ideally, the maximum speed-up is ‘p’. However, actual performance depends on factors like thread synchronization and memory access. Optimized implementations minimize main memory use and favor cache memory, which offers faster access despite its smaller size.
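The speed-up ratio described above is a one-line calculation. The timings in this sketch are invented for illustration, and the efficiency figure (speed-up divided by the number of processing units) is a common companion metric added here, not something the article defines.

```python
def speedup(t_single: float, t_parallel: float) -> float:
    """Speed-up: time on one processing unit divided by time on p units."""
    if t_single <= 0 or t_parallel <= 0:
        raise ValueError("execution times must be positive")
    return t_single / t_parallel


def efficiency(t_single: float, t_parallel: float, p: int) -> float:
    """Fraction of the ideal speed-up (p) that was actually achieved."""
    return speedup(t_single, t_parallel) / p


# Hypothetical measurement: 12 s on one core, 4 s on four cores.
s = speedup(12.0, 4.0)          # 3.0, below the ideal value of 4
e = efficiency(12.0, 4.0, p=4)  # 0.75
```

The gap between 3.0 and the ideal 4.0 is where synchronization and memory-access overhead show up.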

Various parallelization techniques optimize computational resources in video coding implementations:

Picture-Level Parallelization – This technique processes multiple images simultaneously, provided motion-compensated prediction dependencies are met. It is straightforward, minimally impacts coding efficiency, and is widely used in software implementations of H.264/MPEG-4 AVC. Its limitations include imbalanced core workloads due to differing encoding/decoding times and increased frame rate without latency improvement.

Slice-Level Parallelization – Images can be divided into independent slices, allowing concurrent processing. While slices in HEVC and H.264/MPEG-4 AVC facilitate parallel operations, cross-slice in-loop filtering may still introduce dependencies. Though useful, excessive slicing can reduce coding efficiency due to restricted prediction and entropy coding across slice boundaries, so it is best used in moderation.

Block-Level Parallelization – Hardware implementations often use a macroblock-level pipeline, assigning different processing cores to tasks like entropy coding, in-loop filtering, and intra prediction, allowing concurrent macroblock processing. This approach, however, may require sophisticated scheduling due to spatial dependencies between macroblocks. The wavefront scheduling technique groups macroblocks into wavefronts, enabling concurrent processing while respecting dependencies. While block-level parallelization can be extended to multi-core systems, decoupling entropy decoding for parallelization can limit efficiency as it may increase memory usage and not significantly speed up entropy decoding itself.

To address the constraints of H.264/MPEG-4 AVC parallelization strategies, HEVC incorporates specific tools for high-level parallel processing:

- Wavefront Parallel Processing (WPP) – This technique divides a picture into CTU rows that can be processed in parallel, with each row starting slightly after the row above it so that prediction and entropy-coding dependencies are preserved.

- Tiles – This method partitions images into rectangular CTU regions with restricted cross-partition dependencies, allowing independent processing.

Both WPP and tiles facilitate partitioned image processing, with each section being accessible by separate cores using distinct entry points indicated in the slice segment headers. This structure supports efficient and parallelized access to partitions, enhancing real-time codec capabilities.
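The wavefront pattern can be simulated with a small scheduling sketch. It assumes one thread per CTU row, one time step per CTU, and that a CTU may start once its left neighbour and the CTU above and to the right are finished, which produces the two-CTU lag between consecutive rows that WPP is known for. Real decoders have variable per-CTU cost, so treat the numbers as idealized; the function names are hypothetical.

```python
def wpp_start_times(ctu_cols: int, ctu_rows: int, lag: int = 2):
    """Earliest time step at which each CTU can start under wavefront scheduling."""
    start = [[0] * ctu_cols for _ in range(ctu_rows)]
    for r in range(ctu_rows):
        for c in range(ctu_cols):
            ready = 0
            if c > 0:
                # The left neighbour in the same row must be finished.
                ready = start[r][c - 1] + 1
            if r > 0:
                # The row above must be `lag` CTUs ahead (clamped at the row end).
                above = min(c + lag - 1, ctu_cols - 1)
                ready = max(ready, start[r - 1][above] + 1)
            start[r][c] = ready
    return start


def total_steps(start) -> int:
    """Number of time steps until the last CTU has been processed."""
    return start[-1][-1] + 1


schedule = wpp_start_times(ctu_cols=8, ctu_rows=4)
parallel_steps = total_steps(schedule)   # 14
sequential_steps = 8 * 4                 # 32
```

For this 8×4 CTU grid the wavefront finishes in 14 steps instead of 32. The ramp-up and ramp-down at the start and end of the picture are why the gain stays below the number of rows.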

H.263 vs HEVC vs AVC codec: which is better?

H.263 Codec (1st Generation)

Developed from 1993 to 1998 for low-bitrate communication, H.263 shared similarities with H.262 (MPEG-2) but was optimized for more efficient low-delay, low-bitrate coding. The subsequent H.263+ and H.263++ versions added optional annexes that improved performance. The most effective profiles were the Conversational High Compression (CHC) profile for low-delay use and the High Latency Profile (HLP), with B-picture support, for higher-latency scenarios.

AVC/H.264 Codec (2nd Generation)

Developed between 1999 and 2009, AVC/H.264 enhanced flexibility with macroblock partitioning and multiple reference frames. It introduced quarter-sample precision motion vectors and advanced intra-prediction in the spatial domain. The codec featured transform coding with 4×4 and 8×8 transforms and exact integer operations for precise reconstructions. Two entropy coding methods, CAVLC and CABAC, improved compression efficiency, with CABAC being more advanced. The deblocking filter further reduced artifacts, and its High Profile supported 8-bit 4:2:0 video.

HEVC/H.265 Codec (3rd Generation)

Developed from 2010 to 2013, HEVC uses a quadtree-based coding tree unit (CTU) structure for greater adaptability, with CTUs of up to 64×64 samples. It expanded intra-prediction to 35 modes and allowed transform block sizes up to 32×32. Motion compensation retains quarter-sample precision with enhanced interpolation filters, and HEVC’s merge mode reduces the cost of coding motion parameters. Added features like sample-adaptive offset (SAO) and parallel processing tools (slices, tiles, wavefronts) improved coding efficiency and throughput, supporting higher resolutions like 4K and UHD.

| Feature | H.263 (1st Gen) | AVC/H.264 (2nd Gen) | HEVC/H.265 (3rd Gen) |
| --- | --- | --- | --- |
| Development Period | 1993-1998 | 1999-2009 | 2010-2013 |
| Primary Use | Low-bitrate communication | Broad HD and FHD content | High-resolution (4K/UHD) content |
| Coding Structure | Macroblock-based | Macroblock-based | Quadtree-based CTU |
| Intra-Prediction Modes | Limited | Up to 9 modes (8 directional plus DC) | 35 modes (33 angular plus planar and DC) |
| Motion Compensation | Limited B-picture use (HLP) | Quarter-sample precision, multiple refs | Quarter-sample precision, merge mode |
| Transform Sizes | Basic | 4×4 and 8×8 | 4×4 up to 32×32 |
| Entropy Coding | Basic VLC | CAVLC and CABAC | CABAC |
| Artifact Reduction | None | Deblocking filter | Deblocking filter, SAO |
| Parallel Processing | Not supported | Limited | Slices, tiles, wavefronts |
| Resolution Focus | Low-resolution | Up to FHD | 4K/UHD |

HEVC vs AVC – cost and bandwidth utilization

The shift from older, widely used video codecs like H.264 (AVC) to newer codecs such as AV1 or HEVC (H.265) is often justified by the promise of improved encoding efficiency, which leads to bandwidth and cost savings. However, the real-world experience of adopting newer codecs varies. While higher resolutions like HD and 4K often show substantial savings, ranging from 25% to 40%, the benefits become less significant at lower resolutions, leading to average overall bitrate savings of around 15% to 20%.

Cost savings related to bandwidth are not straightforward due to various influencing factors. Increased compute costs for HEVC encoding can offset some of the benefits, although advancements in cloud infrastructure and better encoder technology are helping to bridge the gap. The use of parallelized encoding across multiple machines helps manage these compute costs, making HEVC more feasible and affordable.

Caching efficiency also plays a role but generally does not present significant drawbacks. Since most devices continue to support older formats like AVC, which are highly cached, and a large portion also supports HEVC, overall caching efficiency remains high, with minimal negative impact.

Ultimately, the relationship between codec efficiency and cost is complex. Savings from reduced bitrates do not directly translate to proportional CDN cost reductions, as network and bandwidth limitations impact how much data users can actually receive. For instance, lowering the bitrate from 6 Mbps to 4.5 Mbps for a 1080p encode may not benefit users with slower connections, who will still receive data at their maximum supported rate. This results in real CDN savings that may be closer to 5% to 10% instead of the full potential 20%, highlighting that these savings are not a one-to-one correlation.
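A rough model makes the gap between nominal and realized savings visible. It assumes that each viewer receives the lesser of their connection speed and the top rendition's bitrate, and it uses a made-up sample of connection speeds; real adaptive streaming ladders have discrete steps, so this only illustrates the direction of the effect.

```python
def delivered_mbps(connection_mbps: float, top_rendition_mbps: float) -> float:
    """Bitrate actually delivered: capped by the viewer's connection or the top rendition."""
    return min(connection_mbps, top_rendition_mbps)


def cdn_savings(connections, old_top_mbps: float, new_top_mbps: float) -> float:
    """Fraction of delivered traffic saved when the top rendition's bitrate is lowered."""
    before = sum(delivered_mbps(c, old_top_mbps) for c in connections)
    after = sum(delivered_mbps(c, new_top_mbps) for c in connections)
    return (before - after) / before


# Hypothetical viewer connection speeds in Mbps.
viewers = [2, 3, 4, 5, 8, 20]

nominal = (6.0 - 4.5) / 6.0                   # 0.25: the codec-level bitrate reduction
realized = cdn_savings(viewers, 6.0, 4.5)     # about 0.135 for this sample
```

In this sample, the three viewers on slower connections receive the same amount of data before and after the change, so a 25% bitrate reduction yields only about 13% less delivered traffic.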

FAQs

What is HEVC?

HEVC, which stands for High Efficiency Video Coding, also known as H.265, is a video compression standard that significantly improves data compression compared to its predecessor, H.264 (AVC). This technology compresses video files to smaller sizes while retaining high-quality visuals, making it particularly beneficial for streaming and storing high-definition formats such as 4K and 8K.

What is HEVC Video Extensions?

HEVC Video Extensions is a codec package provided by Microsoft that enables Windows devices to play videos encoded with the HEVC (H.265) standard. This extension is essential for devices or software that do not natively support HEVC playback and can typically be downloaded from the Microsoft Store, though some Windows versions may include it pre-installed.

How to convert HEVC to MP4?

To convert HEVC to MP4, you can use video conversion software like HandBrake, VLC Media Player, or FFmpeg, all of which support HEVC input and output to MP4 format. For example, with HandBrake, you import the HEVC file, select the MP4 container, adjust any output settings, and start the conversion. VLC Media Player allows you to convert by navigating to ‘Media’ > ‘Convert/Save’, adding the HEVC file, choosing the MP4 profile, and initiating the process. Alternatively, online tools like CloudConvert or Online-Convert.com offer simple, software-free HEVC to MP4 conversions.
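Since HEVC is a codec and MP4 is a container, "converting" can mean two different things: repackaging the existing HEVC stream into an MP4 file without re-encoding, or transcoding it to H.264 for older players. The sketch below builds an FFmpeg command line for either case. The file names are placeholders, the `-tag:v hvc1` option is included on the assumption that Apple players are a target, and transcoding requires an FFmpeg build with `libx264`.

```python
def build_ffmpeg_command(src: str, dst: str, reencode_to_h264: bool = False):
    """Return the argument list for an FFmpeg conversion to an MP4 file."""
    cmd = ["ffmpeg", "-i", src]
    if reencode_to_h264:
        # Transcode video to H.264 and audio to AAC (slower, changes the codec).
        cmd += ["-c:v", "libx264", "-crf", "23", "-c:a", "aac"]
    else:
        # Copy the streams as-is into the MP4 container (fast, no quality loss).
        cmd += ["-c", "copy", "-tag:v", "hvc1"]
    return cmd + [dst]


remux = build_ffmpeg_command("input.mkv", "output.mp4")
transcode = build_ffmpeg_command("input.mkv", "output.mp4", reencode_to_h264=True)

# To run a command, pass the list to subprocess, for example:
#   import subprocess
#   subprocess.run(remux, check=True)
```

Stream copy is usually the better first choice when the target player already supports HEVC, because it avoids a second lossy encode.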

HEVC vs 1080p?

HEVC and 1080p refer to different aspects of video technology; HEVC (H.265) is a video compression format, while 1080p denotes a resolution (1920 x 1080 pixels) indicating Full HD quality. HEVC allows for more efficient compression, significantly reducing file sizes while preserving video quality, and can be applied to various resolutions including 1080p. On the other hand, 1080p simply describes the number of pixels and the resolution quality. Using HEVC to compress 1080p content results in smaller file sizes compared to older compression standards like H.264, without sacrificing the visual experience.

Image source: Wikipedia

Jyoti began her career as a software engineer in HCL with UNHCR as a client. She started evolving her technical and marketing skills to become a full-time Content Marketer at VdoCipher.
